Backup Performance Testing

Reliable database backups are perhaps the single most important aspect of a Database Administrator’s job. The business risk from data-loss that can occur without a reliable database backup are substantial enough to bring many smaller companies close to bankruptcy. I can’t stress enough the importance of ensuring the reliability of your database backups. Please, test your backups by restoring them onto a test system. Having said that, this blog post is only going to provide a framework for testing the performance of your backup system.

Microsoft SQL Server provides a range of parameters that affect backup performance; including the number of buffers used, the maximum transfer size per I/O operation, the I/O block size, and the backup stripe count. Microsoft provides documentation about these settings here.

The code below takes multiple backups of the target database with various combinations of the parameters listed above. This code, as configured in the example, results in 2400 backups being taken. Results are saved into a global ##temp table for analysis.

NOTE: The backups taken by this process are automatically deleted immediately after the backup completes. Backups are taken with the COPY_ONLY option which does not affect the normal sequence of backups. Since Backup operations are an I/O intensive operation, and this script creates a very large number of backup operations sequentially, you’ll want to ensure you do this either on a non-production system that closely resembles your production system, or if you are doing it on your production system, ensure you do it during a quiet period to avoid impacting client activity. Be warned, this test takes a very long time to run owing to the large number of backups created.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 |

SET NOCOUNT ON; IF OBJECT_ID(N'tempdb..#output', N'U') IS NOT NULL DROP TABLE #output; CREATE TABLE #output ( txt nvarchar(1000) NULL ); IF OBJECT_ID(N'tempdb..##backup_test_results', N'U') IS NOT NULL DROP TABLE ##backup_test_results; CREATE TABLE ##backup_test_results ( rownum int NOT NULL PRIMARY KEY CLUSTERED IDENTITY(1,1) , DBName sysname NOT NULL , StartDate datetime NOT NULL , BackupPath varchar(260) NOT NULL , StripeCount int NOT NULL , [BufferCount] int NOT NULL , [BlockSize] int NOT NULL , [MaxTransferSize] int NOT NULL , [Checksum] int NOT NULL , CopyOnly int NOT NULL , [Compression] int NOT NULL , [Format] int NOT NULL , [Init] int NOT NULL , Duration int NOT NULL , IsDefaultBackup bit NOT NULL --indicates default buffer counts ); --Trace flag 3213 displays backup/restore parameters used. --Trace flag 3604 sends output to the client instead of the errorlog. --DBCC TRACEON(3213, 3604); DECLARE @DebugOnly bit = 0; DECLARE @DatabaseName sysname; DECLARE @BackupPath nvarchar(260); DECLARE @MaxStripes int; DECLARE @CurrentStripes int; DECLARE @BufferCount int; --BUFFERCOUNT DECLARE @BufferCountLoop int; DECLARE @BlockSize int; --BLOCKSIZE (512, 1024, 2048, 4096, 8192, 16384, 32768, and 65536) DECLARE @MaxTransferSize int; --MAXTRANSFERSIZE (64KB to 4MB) DECLARE @Checksum bit; DECLARE @CopyOnly bit; DECLARE @Compression bit; --COMPRESSION or NO_COMPRESSION DECLARE @Format bit; --FORMAT or NOFORMAT DECLARE @Init bit; --INIT or NOINIT DECLARE @DatabaseDeviceCount int; DECLARE @ToClause nvarchar(max); DECLARE @cmd nvarchar(max); DECLARE @StartTime datetime; DECLARE @EndTime datetime; DECLARE @Connector nvarchar(max); DECLARE @DelConnector nvarchar(max); DECLARE @DelCmd nvarchar(max); DECLARE @msg nvarchar(100); SET @MaxStripes = 10; SET @DatabaseName = DB_NAME(); SET @BackupPath = N'C:/Temp/BackupTest_' + @DatabaseName; SET @BufferCount = 1; SET @BlockSize = 512; SET @MaxTransferSize = 1048576; SET @Checksum = 0; SET @CopyOnly = 1; SET @Compression = 1; SET @Format = 1; SET @Init = 1; DECLARE @res TABLE ( [File Exists] bit NOT NULL , [File is a Directory] bit NOT NULL , [Parent Directory Exists] bit NOT NULL ); INSERT INTO @res EXEC sys.xp_fileexist @BackupPath; IF (SELECT r.[File is a Directory] FROM @res r) = 0 BEGIN SET @msg = N'Backup Folder "' + @BackupPath + N'" does not exist.'; RAISERROR (@msg, 18, 1); END ELSE BEGIN --distinct I/O paths, i.e. individual disks, not database files SELECT @DatabaseDeviceCount = COUNT(DISTINCT SUBSTRING(mf.physical_name, 1, CHARINDEX(':', mf.physical_name))) FROM sys.master_files mf WHERE mf.database_id = (SELECT database_id from sys.databases d WHERE d.name = @DatabaseName) AND mf.type_desc = 'ROWS'; IF @DebugOnly = 0 BEGIN /* warm up the I/O path to reduce issues with timimg for first backup */ BACKUP DATABASE @DatabaseName TO DISK = 'NUL' WITH COPY_ONLY; END --buffercount loop (5 iterations) SET @BufferCountLoop = 0; --0 is special case for default number of buffers WHILE @BufferCountLoop < = 128 BEGIN --maxtransfersize loop (6 iterations) SET @MaxTransferSize = 65536; WHILE @MaxTransferSize <= (2 * 1048576) BEGIN --blocksize loop (8 iterations) SET @BlockSize = 512; WHILE @BlockSize <= 65536 BEGIN SET @CurrentStripes = 1 WHILE @CurrentStripes <= @MaxStripes BEGIN SET @Connector = N''; SET @DelConnector = N''; SET @ToClause = N''; SET @DelCmd = N''; DECLARE @i int = 1; WHILE @i <= @CurrentStripes BEGIN SET @ToClause = @ToClause + @Connector + N'DISK = N''' + @BackupPath + N'/' + @DatabaseName + N'_TestBackup_Stripe_' + RIGHT(N'00000000000' + CONVERT(nvarchar(10), @i), 10) + N'.bak'''; SET @DelCmd = @DelCmd + @DelConnector + N'EXEC sys.xp_delete_file 0, ''' + @BackupPath + N'/' + @DatabaseName + N'_TestBackup_Stripe_' + RIGHT(N'00000000000' + CONVERT(nvarchar(10), @i), 10) + N'.bak'';'; SET @Connector = CHAR(13) + CHAR(10) + CHAR(9) + N', '; SET @DelConnector = CHAR(13) + CHAR(10); SET @i += 1; END IF @BufferCountLoop = 0 BEGIN /* default buffer count algorthim (wrapped for readability) https://blogs.msdn.microsoft.com/sqlserverfaq/2010/05/06/ incorrect-buffercount-data-transfer-option-can-lead-to-oom-condition/ */ SET @BufferCount = (@CurrentStripes * 4) + @CurrentStripes + (2 * @DatabaseDeviceCount); END ELSE BEGIN SET @BufferCount = @BufferCountLoop; END SET @cmd = N'BACKUP DATABASE ' + QUOTENAME(@DatabaseName) + N' TO ' + @ToClause + N' WITH BUFFERCOUNT = ' + CONVERT(nvarchar(10), @BufferCount) + N' , BLOCKSIZE = ' + CONVERT(nvarchar(10), @BlockSize) + N' , MAXTRANSFERSIZE = ' + CONVERT(nvarchar(10), @MaxTransferSize) + N' ' + CASE WHEN @Checksum = 1 THEN N', CHECKSUM' ELSE N', NO_CHECKSUM' END + N' ' + CASE WHEN @CopyOnly = 1 THEN N', COPY_ONLY' ELSE N'' END + N' ' + CASE WHEN @Compression = 1 THEN N', COMPRESSION' ELSE N', NO_COMPRESSION' END + N' ' + CASE WHEN @Format = 1 THEN N', FORMAT' ELSE N', NO_FORMAT' END + N' ' + CASE WHEN @Init = 1 THEN N', INIT' ELSE N', NO_INIT' END + N' , STATS = 10'; PRINT @cmd; PRINT N''; PRINT @DelCmd; IF @DebugOnly = 0 BEGIN SET @StartTime = GETDATE(); --INSERT INTO #output (txt) EXEC sys.sp_executesql @cmd; SET @EndTime = GETDATE(); INSERT INTO ##backup_test_results (DBName, StartDate, BackupPath, StripeCount, [BufferCount] , [BlockSize], [MaxTransferSize], [Checksum], CopyOnly, [Compression], [Format], [Init] , Duration, IsDefaultBackup) VALUES (@DatabaseName, @StartTime, @BackupPath, @CurrentStripes, @BufferCount, @BlockSize , @MaxTransferSize, @Checksum, @CopyOnly, @Compression, @Format, @Init , DATEDIFF(MILLISECOND, @StartTime, @EndTime), CASE WHEN @BufferCountLoop = 0 THEN 1 ELSE 0 END); PRINT N'Duration in MILLISECONDS: ' + CONVERT(nvarchar(10), DATEDIFF(MILLISECOND, @StartTime, @EndTime)); IF @DelCmd <> '' BEGIN INSERT INTO #output (txt) EXEC sys.sp_executesql @DelCmd; END END SET @CurrentStripes += 1; PRINT N''; END SET @BlockSize = @BlockSize * 2; END SET @MaxTransferSize = @MaxTransferSize * 2; END PRINT '==============================================================================================='; SET @BufferCountLoop = @BufferCountLoop * 2; IF @BufferCountLoop = 0 SET @BufferCountLoop = 4; --reset the special case so we can try non-default-buffer-count backups END END --DBCC TRACEOFF(3213, 3604); |

You’ll see output similar to this while running the code:

Processed 33976 pages for database 'Test', file 'Test_DB' on file 1.

Processed 10792 pages for database 'Test', file 'MyFile' on file 1.

Processed 1 pages for database 'Test', file 'Test_Log' on file 1.

BACKUP DATABASE successfully processed 44769 pages in 3.297 seconds (106.081 MB/sec).

BACKUP DATABASE [Test]

TO DISK = N'C:/Temp/BackupTest_Test/Test_TestBackup_Stripe_0000000001.bak'

WITH BUFFERCOUNT = 7

, BLOCKSIZE = 512

, MAXTRANSFERSIZE = 65536

, NO_CHECKSUM

, COPY_ONLY

, COMPRESSION

, FORMAT

, INIT

, STATS = 10

EXEC sys.xp_delete_file 0, 'C:/Temp/BackupTest_Test/Test_TestBackup_Stripe_0000000001.bak';

10 percent processed.

20 percent processed.

30 percent processed.

40 percent processed.

50 percent processed.

60 percent processed.

70 percent processed.

80 percent processed.

90 percent processed.

Processed 33976 pages for database 'Test', file 'Test_DB' on file 1.

Processed 10792 pages for database 'Test', file 'MyFile' on file 1.

100 percent processed.

Processed 1 pages for database 'Test', file 'Test_Log' on file 1.

BACKUP DATABASE successfully processed 44769 pages in 6.351 seconds (55.070 MB/sec).

Duration in MILLISECONDS: 6390

BACKUP DATABASE [Test]

TO DISK = N'C:/Temp/BackupTest_Test/Test_TestBackup_Stripe_0000000001.bak'

, DISK = N'C:/Temp/BackupTest_Test/Test_TestBackup_Stripe_0000000002.bak'

WITH BUFFERCOUNT = 12

, BLOCKSIZE = 512

, MAXTRANSFERSIZE = 65536

, NO_CHECKSUM

, COPY_ONLY

, COMPRESSION

, FORMAT

, INIT

, STATS = 10

EXEC sys.xp_delete_file 0, 'C:/Temp/BackupTest_Test/Test_TestBackup_Stripe_0000000001.bak';

EXEC sys.xp_delete_file 0, 'C:/Temp/BackupTest_Test/Test_TestBackup_Stripe_0000000002.bak';

You can check the global temporary table while the code is running to see progress:

|

1 2 |

SELECT * FROM ##backup_test_results;</code><code> |

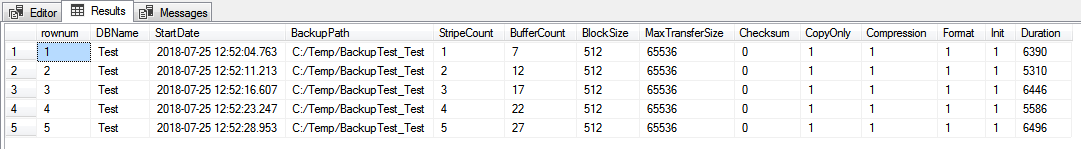

Results look like this:

I use two pivots to analyse the full results. The first pivot looks at the results where the buffer count is the default count chosen by SQL Server. The second shows the non-default buffer counts:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

SELECT p.DBName , BufferCount = 'Default' , p.MaxTransferSize , p.BlockSize , p.[1], p.[2], p.[3], p.[4], p.[5], p.[6], p.[7], p.[8], p.[9], p.[10] , AvgDuration = (p.[1]+ p.[2]+ p.[3]+ p.[4]+ p.[5]+ p.[6]+ p.[7]+ p.[8]+ p.[9]+ p.[10]) / 10 FROM ( SELECT btr.DBName , btr.BlockSize , btr.MaxTransferSize , btr.StripeCount , btr.Duration FROM ##backup_test_results btr WHERE btr.IsDefaultBackup = 1 ) src PIVOT ( MIN(src.Duration) FOR src.StripeCount IN ( [1], [2], [3], [4], [5], [6], [7], [8], [9], [10] ) ) p ORDER BY p.DBName , p.MaxTransferSize , p.BlockSize; SELECT p.DBName , p.BufferCount , p.MaxTransferSize , p.BlockSize , p.[1], p.[2], p.[3], p.[4], p.[5], p.[6], p.[7], p.[8], p.[9], p.[10] FROM ( SELECT btr.DBName , btr.BufferCount , btr.BlockSize , btr.MaxTransferSize , btr.StripeCount , btr.Duration FROM ##backup_test_results btr WHERE btr.IsDefaultBackup = 0 ) src PIVOT ( MIN(src.Duration) FOR src.StripeCount IN ( [1], [2], [3], [4], [5], [6], [7], [8], [9], [10] ) ) p ORDER BY p.DBName , p.BufferCount , p.MaxTransferSize , p.BlockSize; |

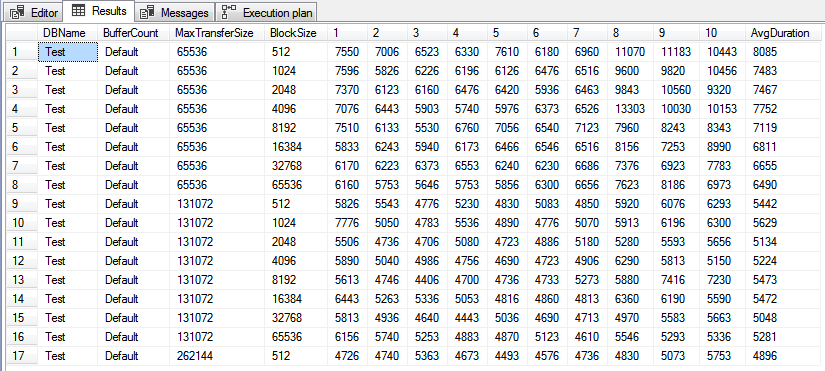

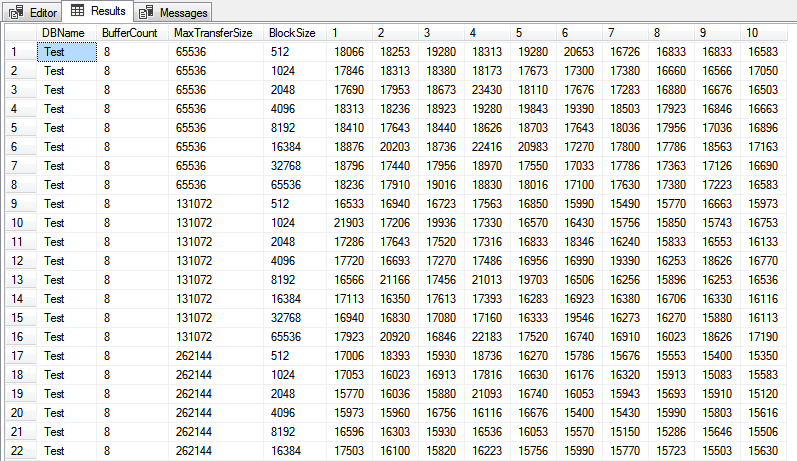

The pivoted results look like this:

The columns numbered 1 through 10 show the duration in milliseconds for each set of parameters by stripe-count.

The sample results shown above seem to indicate there is no clear preference from any of the settings; in my case this test was ran against a reasonably small database over the LAN. Both the backup target folder and the database files reside on busy storage area networks (SANs) that appear to be masking the effects of modifying the parameters tested by my code. Overall, there seems to be very little difference in duration between any of the backups taken against my test database. In this case, it makes sense to leave the defaults in place, and simply do a BACKUP DATABASE xxx TO DISK = '\path\to\backup';. Pretty clearly, with different hardware setups, this testing rig may show certain settings are clear winners over the defaults.

FYI, Microsoft has made some significant improvements to backup speed in SQL Server 2017 for databases on large, high-end servers. Read about that on this MSDN blog post.

Thoughts? Questions? Did I do something wrong? Let me know in the comment section below.

Nice testing script! It’s too bad the results didn’t end up showing any interesting differences. One thing I noticed was your comment before the backup to NUL that said “read the database into memory.” I thought it would be surprising if backups impacted the buffer pool like that, and sure enough Paul Randal confirms my theory, so thought I’d pass it along:

“And backups don’t use the buffer pool at all so they’re not going to cause buffer pool flushing either.”

Source: https://www.sqlskills.com/blogs/paul/buffer-pool-disfavoring/

Thanks! I’ll update the post to reflect those details.

I changed the comment 😉

Haha looks good 😛 Honestly, it’s probably a good way to baseline your “reading pages from disk” overhead before doing real backups. So I think it definitely makes sense to leave it in.

The tuning parameters in SQL Server backups mostly apply to large storage systems with parallel IO paths and multiple IO volume, and then this was when storage was on HDD arrays.

Example: say there are 4 IO channels to the data files and that at some maxtransfersize, its take 10 simultaneous IOs to saturate that channel, then setting buffer count to 40 might be good.

Furthermore, back when storage was on HDD, great backup performance could be achieved when data was on one set of volumes, and backups were on another set, and that these are different sets of underlying physical disks, a condition that SAN admin would absolutely refuse to provide. Otherwise, we lose the ability to benefit from the great sequential (or large block) IO characteristics of HDDs, instead generating two concurrent IO streams to different locations on the HDD, resulting in random IO performance.

My input was that the object was not maximum possible backup performance, but rather high backup performance while still providing a SQL Server responsive to user queries. This can be achieved by looking for the knee in the IO MB/s and latency. This is where about 85-90% of peak IO is achieved, but the disks are at queue depth 1 in latency characteristics.

You might think all this is moot with SSD storage, except that most consumer grade SSDs have a bathtub performance characteristics. Pure sequential (large block) read performance is great (550MB/s) and so it pure large block write, possibly 300-400MB/s, until the ready blocks are exhausted. But the performance with a mixed read/write sucks.

It does not make sense to buy high performance enterprise SSDs for you laptop or even desktop. So the strategy of one SSD for data and another for the backup destination makes sense.

Consider a NVMe PCI-E SSD for the data (up to 3.5GB/s) and a SATA SSD for backup (400MB/s) on the assumption that your data might be 3-4:1 compressible. This is not an optimal match, but not many notebooks accommodate 2 PCI-E NVMe SSDs.

Thanks for those details, Joe!

I performed this test on a server that I am preparing for production. It had the ideal conditions, nothing else being done on the server other than this test. I started with the master database. It took about 30 minutes to complete. The results were inconclusive and look similar to your results.

Next, I preformed the test against a 10GB database. It ran for about 8.5 hours before I lost my connection to SQL. As it was running, I was examining the results being produced. Backups were running from as low as 30 seconds, but as long as a minute.

I realized that my tests were not in a completely isolated environment. My destination for the backups are on a NAS over the network. I was seeing variances that I could correlate to other activity on the NAS. Although I do not have a way to correlate network activity, I am sure it had some effect on the tests. As a result of these factors, for a valid test, I would need to either find a way to completely isolate my tests or perform this test many times to get a good average.

The methodology of measuring the time difference between times captured before and after seems like a reasonable method. The backup command produces a summary at the completion of the process. An example: “BACKUP DATABASE successfully processed 1100203 pages in 53.911 seconds (159.435 MB/sec).” The time difference in this example was 54193ms. That’s a difference of 300ms. In the tests that I performed, I have seen as high as almost 500ms difference. For a small database, such as master, this difference it large enough to skew the results. The test would need to be performed against a database that is large enough that a 500ms skew is trivial.

Based on my limited testing, I believe the size of the database has an effect on the optimum backup settings. I would want to test against sizes such as 10GB, 100GB, 500GB, and 1TB. But, this would probably take weeks of testing. Capturing the three metrics the backup command produces in its summary would be great for analysis.

Thanks for your time and effort you put into writing the test script and this article! Although I discovered problems in the methodology, it was a good exercise and a great learning opportunity!

Great points, and thanks for the reply. Yes, bigger databases are definitely a must for reducing errors in timing.